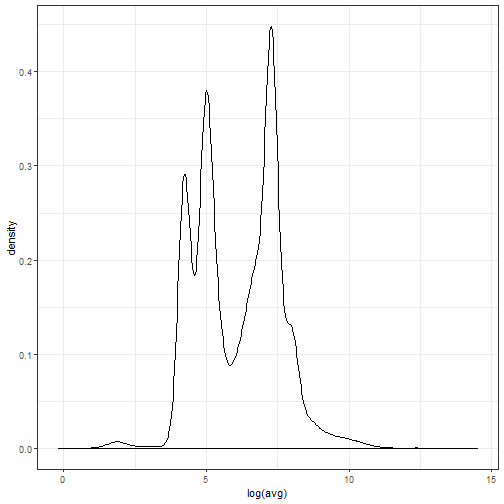

class: center, middle, inverse, title-slide # Machine learning in R - Day 3 ## Hands-on workshop <html> <div style="float:left"> </div> <hr align='center' color='#116E8A' size=1px width=97%> </html> ### Katrien Antonio, Jonas Crevecoeur and Roel Henckaerts ### <a href="https://katrienantonio.github.io">NN ML workshop</a> | February 11-13, 2020 --- # Today's Outline .pull-left[ * [Introduction](#introduction) - Unpacking our toolbox - Tensors * [Neural network fundamentals](#fundamentals) - Essential concepts - Model building and training in {keras} - Building your first ANN - Evaluating your model - Interpretation tools * [Auto encoders](#autoencoder) - Data compression and feature extraction - Evaluation ] .pull-right[ * [Convolutional neural networks](#cnn) - Handling new data formats - Convolutional layers explained - Evaluation and intepretation * [Regression with neural networks](#regression) - Redefining GLMs as a neural network - Including exposure - Case study ] --- name: map-ML-world class: right, middle, clear background-image: url("Images/map_ML_world.jpg") background-size: 45% background-position: left .KULbginline[Some roadmaps to explore the ML landscape...] <img src = "Images/AI_ML_DL.jpg" height = "350px" /> .font60[Source: [Machine Learning for Everyone In simple words. With real-world examples. Yes, again.](https://vas3k.com/blog/machine_learning/)] --- name: map-ML-world class: right, middle, clear background-image: url("Images/main_types_ML.jpg") background-size: 85% background-position: middle --- class: inverse, center, middle name: introduction # Introduction <html><div style='float:left'></div><hr color='#FAFAFA' size=1px width=796px></html> --- # Getting started <br> Download the [GitHub repository](https://github.com/katrienantonio/workshop-ML) for this course and open the Rproject file .KULbginline[Neural networks.Rproj]. Today's session will make extensive use of {keras} and {tidyverse}. <br> Don't forget to load these packages in your R session. ```r require(keras) require(tidyverse) ``` .pull-left[ .center[ <img src = "Images/keras.jpg" height="200px" /> ] ] .pull-right[ .center[ <img src = "Images/tidyverse.png" height="200px" /> ] ] --- # The programming framework for today <br> <img src="Images/modelflow.png" width="600" height="100" style="display: block; margin: auto;" /> <br> * Tensorflow: <br> Open source platform for machine learning developped by google. * Keras: <br>An inuitive high level interface for Tensorflow. * R: <br>Our favorite programming language. --- # Why is it called tensorflow? <br> Untill now, all the .hi-pink[input] features of our model were individual data points, i.e. .hi-pink[zero dimensional]: <!-- `$$\text{age_car = 5}, \quad \text{fuel = gasoline}, \quad \text{bm = 10}$$` --> .center[ `age_car = 5`, `fuel = gasoline`, `bm = 10` ] <br> In a .hi-pink[big data world] with structured and unstructured data, our .hi-pink[input] can be a * time series: 1-dimensional, * sound fragment: 2-dimensional, * image in color: 3-dimensional, * movie: 4-dimensional, * ... <br> We require a framework that can flexibly adjust to all these data structures. --- # Why is it called tensorflow? (cont.) .hi-pink[Tensorflow] is this flexible framework which consists of highly optimized functions based on .hi-pink[tensors]. What is a .hi-pink[tensor]? * A 1-dimensional tensor is a vector <img src="Images/neural_networks_unnamed-chunk-4-1.png" width="300" height="20" style="display: block; margin: auto;" /> * A 2-dimensional tensor is a matrix <img src="Images/neural_networks_unnamed-chunk-5-1.png" width="300" height="100" style="display: block; margin: auto;" /> * ... Many matrix operations, such as the matrix product, can be generalized to tensors. Luckily Keras provides a high level interface to Tensorflow, such that we will have only minimal exposure to tensors and the complicated math behind them. --- # Tensor functions .pull-left[ Keras generalizes common R functions for inputs of type tensor. These functions can be recognized by the prefix `k_`. See the [Keras documentation](https://keras.rstudio.com/articles/backend.html#backend-functions) for a list of all tensor functions. * `k_constant`: create and initialise a tensor. ```r x <- k_constant(c(1,2,3,4), shape = c(2,2)) x ``` ``` ## tf.Tensor( ## [[1. 2.] ## [3. 4.]], shape=(2, 2), dtype=float32) ``` ] .pull-right[ Most tensor operations require an axis parameter to specify the dimensions over which the function should be performed. * `k_mean`: calculate the mean of the tensor. ```r k_mean(x, axis = 1) ``` ``` ## tf.Tensor([2. 3.], shape=(2,), dtype=float32) ``` ```r k_mean(x, axis = 2) ``` ``` ## tf.Tensor([1.5 3.5], shape=(2,), dtype=float32) ``` ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ <br> In this warm up exercise you .KULbginline[create a tensor] and .KULbginline[apply basic tensor functions]. <br> <br> * Create a 3-dimensional tensor in R with values `1,2,...,8` and shape `(2, 2, 2)`. * Calculate the logarithm of this tensor. * Calculate the mean of this tensor over the third axis. ] --- class: clear .pull-left[ ```r require(keras) x <- k_constant(1:8, shape = c(2,2, 2)) *k_log(x) ``` ``` ## tf.Tensor( ## [[[0. 0.6931472] ## [1.0986123 1.3862944]] ## ## [[1.609438 1.7917595] ## [1.9459102 2.0794415]]], shape=(2, 2, 2), dtype=float32) ``` ```r k_mean(x, axis = 3) ``` ``` ## tf.Tensor( ## [[1.5 3.5] ## [5.5 7.5]], shape=(2, 2), dtype=float32) ``` ] .pull-right[ `log(x)` would have also resulted in the correct answer.<br>However, it is best practice to use `k_log`, since the R-function `log` can not be evaluated within tensorflow: ```r require(tensorflow) tf$\`function`(k_log)(x) ``` ``` ## tf.Tensor( ## [[[0. 0.6931472] ## [1.0986123 1.3862944]] ## ## [[1.609438 1.7917595] ## [1.9459102 2.0794415]]], shape=(2, 2, 2), dtype=float32) ``` ```r tf$\`function`(log)(x) ``` ``` ## Error in py_call_impl(callable, dots$args, dots$keywords) : <br>Unable to convert R object to Python type ``` ] --- class: inverse, center, middle name: fundamentals # Neural network fundamentals <html><div style='float:left'></div><hr color='#FAFAFA' size=1px width=796px></html> --- # The MNIST dataset We will use the [MNIST dataset](http://yann.lecun.com/exdb/mnist/), which contains 70.000 labeled images of handwritten digits. .pull-left[ Each image is stored as a 28x28 intensity matrix. <img src="Images/neural_networks_example_mnist_input-1.png" width="360" height="360" style="display: block; margin: auto;" /> ] .pull-right[ Recognizing that the images below all represent the digit 8 is trivial for humans, but difficult for computers. <img src="Images/neural_networks_plot_example_mnist-1.svg" height="150" style="display: block; margin: auto;" /> Neural networks are ideal for situations where the relation between the input (intensity matrix) and the output (0-9) is complicated. ] --- # Loading the MNIST dataset .pull-left[ `dataset_mnist()` retrieves the MNIST dataset from the online repository. Alternatively the dataset can be loaded from the course directory. ```r # download the dataset from the online repository mnist <- dataset_mnist() # load the dataset from the course files load('data/mnist.RData') ``` Assign new names to the input and output data. ```r input <- mnist$train$x output <- mnist$train$y test_input <- mnist$test$x test_output <- mnist$test$y ``` ] .pull-right[ The input data is an `array`: ```r class(input) ``` ``` ## [1] "array" ``` with 60.000 28x28 images: ```r dim(input) ``` ``` ## [1] 60000 28 28 ``` Select the first image: ```r input[1,, ] ``` ] --- # Neural networks <br> In a neural network, **.green[input]** travels through a sequence of **.blue[layers]**, and gets transformed into the **.red[output tensor]**. .pull-left[ <img src="Images/example_neural_network.png" width="400" height="250" style="display: block; margin: auto;" /> ] .pull-right[ This sequential, layer structure is at the core of the Keras libary. ```r model <- * keras_model_sequential() %>% layer_dense(...) %>% layer_dense(...) ``` ] <br> .hi-pink[Layers] consist of .hi-pink[nodes] and the .hi-pink[connections] between these nodes and the previous layer. --- # Meet the neural networks family <div style='height:100%; overflow:scroll;'> <img src = "Images/neural_network_types.png" /> </div> --- # Nodes .pull-left[ Nodes (called neurons) contain a numeric value. <br><br> In this course we will mostly consider nodes with values between zero and one. * zero: the feature is not present in the data * one: the feature is present in the data <img src="Images/neural_network_node.png" width="300" height="100" style="display: block; margin: auto;" /> ] .pull-right[ In our example, the input layer consists of 784 (=28 x 28) nodes. <img src="Images/neural_networks_example_mnist_input-1.png" width="450" height="450" style="display: block; margin: auto;" /> ] --- # The output layer <br> The output layer in our first example will consist of 10 nodes (1 per digit). <br> The values of these nodes will sum to one, such that we can interpret the outcomes as .hi-pink[probabilities]. <img src="Images/neural_network_output_prob.png" width="700" height="100" style="display: block; margin: auto;" /> <br> .hi-pink[Interpretation]: The model assigns a probability of 80% to the image representing a two. --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ <br> We have proposed a structure with 10 nodes for the output layer. <br> <br> .hi-pink[Q]: We list some other structures for encoding the output variable. What do you think are the .hi-pink[advantages] and .hi-pink[disadvantages] of each representation? * Continuous encoding in 1 node. * Binary encoding in 4 nodes, i.e. 6 = 0110. * 9 nodes for levels 1-9 with zero as the default category. ] --- # Hidden layer <br> All layers between the input and output layer are called .KULbginline[hidden layers].<br>Nodes in the hidden layer(s) represent intermediary features that we don't explicitely define. <br> We let the model decide the optimal features. <img src="Images/neural_network_digital.png" width="700" height="100" style="display: block; margin: auto;" /> Recognizing a digit is more difficult than recognizing a horizontal or vertical line. <br> Hidden layers automatically split the problem into smaller problems that are easier to model. <img src="Images/neural_network_digital_example.png" width="700" height="100" style="display: block; margin: auto;" /> --- # Hidden layer (cont.) .pull-left[ ```r model <- keras_model_sequential() %>% * layer_dense(units = 16, * input_shape = c(784)) %>% layer_dense(units = 10) ``` ] .pull-right[ * `layer_dense`: Add a fully connected hidden layer to the neural network, i.e. there is a connection between every node in this layer and the previous. * `input_shape = c(784)`: Define the dimension of the input tensor. <br>We flatten the image into a vector of 784 nodes. * `units = 16`: Set the number of nodes in this hidden layer. ] --- # Hidden layer (cont.) .pull-left[ ```r model <- keras_model_sequential() %>% layer_dense(units = 16, input_shape = c(784)) %>% * layer_dense(units = 10) ``` ] .pull-right[ * The second `layer_dense` adds the output player with 10 nodes. ] --- # Connecting the dots .pull-left[ <!-- Densely network: All nodes in subsequent layers share a connections.--> <img src="Images/neural_network_model1_architecture2.png" width="400" height="370" style="display: block; margin: auto;" /> ] .pull-right[ A pattern of connections in the first layer will activate certain features in the hidden layer. These features in the hidden layer will in turn activate cells in the output layer. The output cell with the highest value is our predicted outcome. .hi-pink[Q]: How is the value of cells in the hidden and output layer determined? ] --- # Connecting the dots (cont.) .pull-left[ The model assigns a .hi-pink[weight] to each connection. .hi-pink[First] the model computes the .hi-pink[linear combination]: $$ z = b + w_1 x_1 + w_2 x_2 + \ldots w_n x_n = b + w \cdot x$$ An extra bias term is added, which can be seen as a node in the previous layer that is always activated. .hi-pink[Second] an .hi-pink[activation function] is applied: $$ y = f(b + w \cdot x)$$ The activation function adds non-linearity in the model. Without the activation function the model would be identical to linear regression. ] .pull-right[ <img src="Images/neural_network_connection.jpeg" width="500" height="260" style="display: block; margin: auto;" /> Source: [Arthur Arnx](https://towardsdatascience.com/first-neural-network-for-beginners-explained-with-code-4cfd37e06eaf) ] --- # Connecting the dots (cont.) <br> There are many popular choices for the activation function `\(f(.)\)` $$ y = f(z) = f(b + w \cdot x)$$ <br> .pull-left[ Many activation functions are available: * **ReLU** ] .pull-right[ * Very popular way to introduce non-linearity. * Simple derivative (fast calibration). * Not used in the output layer. <img src="Images/neural_networks_unnamed-chunk-32-1.png" width="300" height="200" style="display: block; margin: auto;" /> $$ f(z) = \max(z, 0)$$ ] --- # Connecting the dots (cont.) <br> There are many popular choices for the activation function `\(f(.)\)` $$ y = f(z) = f(b + w \cdot x)$$ <br> .pull-left[ Many activation functions are available: * ReLU * **identity: linear regression** ] .pull-right[ * Only used in the output layer. <img src="Images/neural_networks_unnamed-chunk-33-1.png" width="300" height="200" style="display: block; margin: auto;" /> $$ f(z) = z$$ ] --- # Connecting the dots (cont.) <br> There are many popular choices for the activation function `\(f(.)\)` $$ y = f(z) = f(b + w \cdot x)$$ <br> .pull-left[ Many activation functions are available: * ReLU * identity: linear regression * **sigmoid: binary classification** ] .pull-right[ * Transform `\(z\)` to `\((0, 1)\)`. * Focus on values around zero. <img src="Images/neural_networks_unnamed-chunk-34-1.png" width="300" height="200" style="display: block; margin: auto;" /> $$ f(z) = \frac{1}{(1+e^{-z})}$$ ] --- # Connecting the dots (cont.) <br> There are many popular choices for the activation function `\(f(.)\)` $$ y = f(z) = f(b + w \cdot x)$$ <br> .pull-left[ Many activation functions are available: * ReLU * identity: linear regression * sigmoid: binary classification * **softmax: multi-class classification** ] .pull-right[ * Normalize the sum of the nodes in a layer to one. * Depends on all values `\(z\)` of the previous layer. * Only used in the output layer. `$$y_k = \frac{e^{z_{k}}}{\sum_j e^{z_j}}.$$` ] --- # Connecting the dots (cont.) <br> There are many popular choices for the activation function `\(f(.)\)` $$ y = f(z) = f(b + w \cdot x)$$ <br> .pull-left[ Many activation functions are available: * ReLU * identity: linear regression * sigmoid: binary classification * softmax: multi-class classification * **other activation functions** ] .pull-right[ See the [Keras documentation](https://keras.io/activations/): * elu * selu * softplus * softsign * tanh * hard_sigmoid * exponential * leaky ReLU * PReLU * Threshold ReLU ] --- # Connecting the dots (cont.) <br> How can our network detect a horizontal line at the top of the image? .pull-left[ Each node in the hidden layer is connected with the 784 (28x28) nodes in the input layer. We can visualize these weights in a (28x28) image: <img src="Images/neural_network_horizontal_stroke.png" width="300" height="250" style="display: block; margin: auto;" /> ] .pull-right[ For the image on the left, the weighted sum is maximal when there is a horizontal line at the top: `$$z = b + w \cdot w.$$` ] --- # Defining a neural network in {keras} .pull-left[ ```r model <- * keras_model_sequential() ``` ] .pull-right[ We construct the neural network by sequentially adding layers. ] --- # Defining a neural network in {keras} .pull-left[ ```r model <- keras_model_sequential() %>% * layer_dense(units = 16, * activation = 'sigmoid', * input_shape = 784) ``` ] .pull-right[ Specify the number of input nodes as a parameter in the first layer of the model. Add a fully connected, dense layer with 16 nodes. <br> Many [other layer types](https://keras.io/layers/core/) are available. This layer uses the sigmoid activation function. ] --- # Defining a neural network in {keras} .pull-left[ ```r model <- keras_model_sequential() %>% layer_dense(units = 16, activation = 'sigmoid', input_shape = 784) %>% * layer_dense(units = 10, * activation = 'softmax') ``` ] .pull-right[ Add the output layer with 10 units. The `softmax` activation function forces the sum of the outputs to be one. ] --- # Defining a neural network in {keras} .pull-left[ ```r model <- keras_model_sequential() %>% layer_dense(units = 16, activation = 'sigmoid', input_shape = 784) %>% layer_dense(units = 10, activation = 'softmax') * summary(model) ``` ] .pull-right[ The model contains 12730 parameters: * 784 * 16 weights between layer one and two. * 16 bias terms in layer two. * 16 * 10 weights between layer two and three. * 10 bias terms in layer three. ] ``` ## Model: "sequential" ## ___________________________________________________________________________ ## Layer (type) Output Shape Param # ## =========================================================================== ## dense (Dense) (None, 16) 12560 ## ___________________________________________________________________________ ## dense_1 (Dense) (None, 10) 170 ## =========================================================================== ## Total params: 12,730 ## Trainable params: 12,730 ## Non-trainable params: 0 ## ___________________________________________________________________________ ``` --- # Calibrating the model .pull-left[ ```r model <- model %>% * compile(loss = "categorical_crossentropy", optimize = optimizer_rmsprop(), metrics = c('accuracy')) ``` Selects a loss function, distribution for the outcome. Keras includes many common losses: * `"mse"`: gaussian * `"poisson"`: poisson * `"binary_crossentropy"`: bernoulli, non-exclusive classes * `"categorical_crossentropy"`: bernoulli, exclusive classes * other distributions, see the [keras documentation](https://keras.io/losses/) ] .pull-right[ Or define your own loss function ```r mse <- function(y_true, y_pred) { k_mean((y_true - y_pred)^2, axis = 2) } model <- model %>% compile( * loss = mse, optimizer = optimizer_rmsprop(), metrics = c('accuracy')) ``` * `k_mean` is the keras implementation of `mean` that take a tensor as input. * `axis = 2` calculates the mean over the different output nodes. ] --- # Calibrating the model (cont.) .pull-left[ ```r model <- model %>% compile(loss = "categorical_crossentropy", * optimize = optimizer_rmsprop(), metrics = c('accuracy')) ``` Neural networks typically have many degrees of freedom. Keras includes several optimizers for finding a (local) minimum of the loss function. Popular choices are: * `optimizer_rmsprop()` * `optimizer_adam()` * other optimizers, see the [keras documentation](https://keras.io/optimizers/) ] .pull-right[ All optimizers more or less do gradient descent $$w_i = w_i - \delta \cdot \frac{\partial \mathcal{L}}{\partial w_i}, $$ where `\(\mathcal{L}\)` is the loss function and `\(w_i\)` is one of the many tuning parameters and `\(\delta\)` is the learning rate. The gradient, `\(\frac{\partial \mathcal{L}}{\partial w_i}\)`, is calculated using a technique called backpropagation, i.e. a fancy name for the chain rule from mathematics. ] --- # Calibrating the model (cont.) .pull-left[ ```r model <- model %>% compile(loss = "categorical_crossentropy", optimize = optimizer_rmsprop(), * metrics = c('accuracy')) ``` In additition to the loss function, other performance measures can be tracked while calibrating the model. * `accuracy` (= categorical_accuracy) * `binary_accuracy` * `categorical_accuracy` * `sparse_categorical_accuracy` * `top_k_categorical_accuracy` * `sparse_top_k_categorical_accuracy` * `cosine_proximity` * any loss function ] .pull-right[ <img src="Images/neural_network_metrics.png" width="400" height="400" style="display: block; margin: auto;" /> ] --- # Preparing the MNIST dataset We .hi-pink[flatten] the image data (28x28 matrix) into a vector of length 784: ```r input <- array_reshape(input, c(nrow(input), 28*28)) / 255 test_input <- array_reshape(test_input, c(nrow(test_input), 28*28)) / 255 ``` Later in this course we will see how we can analyze this data .hi-pink[without flattening]! We construct 10 dummy variables (0-9) for the output of the model: ```r output <- to_categorical(output, 10) test_output <- to_categorical(test_output, 10) ``` The R-script includes a function `plot_figure` to visualize the input: ```r plot_image(input[17, ]) ``` <img src="Images/neural_networks_plot_image_function-1.png" width="50" height="50" style="display: block; margin: auto;" /> --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ <br> As discussed, any .KULbginline[loss function] can be used as a .KULbginline[metric]. <br> In the case of the MNIST dataset, we search for a model with a high .KULbginline[accuracy], i.e. the likelihood that the model assigns the highest probabililty to the correct output node. .hi-pink[Q]: Why can we not use .KULbginline[accuracy] as our loss function? ] --- # Calibrating the model (cont.) `fit(.)` tunes the model parameters (weights and biasses). <br> There is no need to save the result as the input model gets updated automatically. .pull-left[ ```r model %>% * fit(input, * output, batch_size = 128, epochs = 10, validation_split = 0.2) ``` ] .pull-right[ The first arguments are the input data (images) and the corresponding class (0-9). ] --- # Calibrating the model (cont.) `fit(.)` tunes the model parameters (weights and biasses). <br> There is no need to save the result as the input model gets updated automatically. .pull-left[ ```r model %>% fit(input, output, * batch_size = 128, * epochs = 10, validation_split = 0.2) ``` Parameter updates are calculated based on small subsets of the training data with `batch_size` elements. An `epoch` is one iteration of the algorithm over the full dataset. ] .pull-right[ <img src="Images/neural_network_epoch.gif" width="400" height="300" style="display: block; margin: auto;" /> .right[Source: [Bradley Boehmke](https://github.com/rstudio-conf-2020/dl-keras-tf)] ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ <br> You will now .KULbginline[design] and .KULbginline[calibrate] your own neural network for the MNIST dataset. As a form of paralellized model selection, you will all use different model parameters. This way we gain insight into which parameter values work well for this dataset. <br> <br> .KULbginline[Base model:] Neural network with a single hidden layer with 11-20 nodes. <br> <br> Try some of the following ideas to improve the model: (more ideas on the next slide!) .KULbginline[Adding hidden layers.] <br> <i class="fas fa-lightbulb", style="color:#116E8A"></i> The number of nodes in subsequent layers should decrease. .KULbginline[Changing the batch size.] .KULbginline[Changing the activation function.] ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ <br> .KULbginline[Adding some new layer types:] * [`layer_gaussian_noise`](https://keras.io/layers/noise/): adds gaussian noise N(0, stddev) to the nodes when training the model. This reduces the probability of overfitting. ```r model <- model %>% layer_gaussian_noise(stddev) ``` * [`layer_dropout`](https://keras.io/layers/core/): Sets a fraction `rate` of the input units to zero. This reduces the probability of overfitting. ```r model <- model %>% layer_dropout(rate) ``` * [`layer_batch_normalization`](https://keras.io/layers/normalization/): centers and scales the values of each node in the previous layer. ```r model <- model %>% layer_batch_normalization() ``` ] --- # Model evaluation .pull-left[ `evaluate(.)` calculates losses and metrics for the test dataset. ```r model %>% evaluate(test_input, test_output, verbose = 0) ``` ``` ## $loss ## [1] 0.2336413 ## ## $accuracy ## [1] 0.9341 ``` ] .pull-right[ `predict(.)` returns a vector of length 10 with the probability per output node. ```r prediction <- model %>% predict(test_input) round(prediction[1, ], 3) ``` ``` ## [1] 0.000 0.000 0.001 0.003 0.000 0.000 0.000 0.995 0.000 0.001 ``` The predicted category is the node with the highest probability. ```r category <- apply(prediction, 1, which.max)-1 actual_category <- apply(test_output, 1, which.max)-1 ``` ] --- # Model evaluation (cont.) We inspect the misclassified images to gain more insight in the model. .pull-left[ ```r head(which(actual_category != category)) ``` ``` ## [1] 9 34 39 64 88 93 ``` ] .pull-right[ ```r index <- 9 plot_image(test_input[index, ]) + ggtitle(paste( 'actual: ', actual_category[index], ' predicted: ', category[index], sep='')) + theme(legend.position = 'none', plot.title = element_text(hjust = 0.5)) ``` ] <img src="Images/neural_network_misclassification.png" width="700" height="300" style="display: block; margin: auto;" /> --- # Model evaluation (cont.) We inspect the images for which the model asigns the lowest probability to the correct class. ```r # select per row, the probability corresponding to the correct class prob_correct <- prediction[cbind(1:nrow(prediction), actual_category+1)] # get the index of the 5 lowest records in prob_correct which(rank(prob_correct) <= 5) ``` ``` ## [1] 1501 5735 6167 6506 6652 ``` <img src="Images/neural_network_worst.png" width="700" height="300" style="display: block; margin: auto;" /> --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ <br> You will now .KULbginline[evaluate] your own model! <br><br> 1. Calculate the accuracy of your model on the test set. <br> <br> 2. Visualize some of the misclassified images from your model. <br><br> 3. Generate an image consisting of random noise and let the model classify this image. What do you think of the results? <br> <i class="fas fa-lightbulb", style="color:#116E8A"></i> The input should be a 1x784 matrix with values in [0, 1]. ] --- # Feeding random data to a neural network .pull-left[ ```r random <- matrix(runif(28^2), nrow = 1) plot_image(random[1, ]) ``` <img src="Images/neural_networks_unnamed-chunk-64-1.png" width="360" height="360" style="display: block; margin: auto;" /> ] .pull-right[ ```r round(predict(model, random), 3) ``` ``` ## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] ## [1,] 0 0 0.617 0.124 0.002 0.196 0.042 0.001 0.018 0 ``` The model is pretty sure that the input on the left is a three! ] --- # Model understanding Inspecting the calibrated weights can provide some insight in the features created in the hidden layer. .pull-left[ Every node in the first hidden layer has 784 connections with the input layer. The weights of these connections can be visualized as an 28x28 image. ```r node <- 9 layer <- 1 weights <- model$weights[[2*(layer-1) + 1]][, node] plot_image(as.numeric(weights)) ``` ] .pull-right[ Visualization of the calibrated weights for the 16 nodes in the first hidden layer. <img src="Images/neural_network_calibrated_weights.png" width="400" height="400" style="display: block; margin: auto;" /> ] --- # Summary of the fundamentals .pull-left[ * Define neural networks .KULbginline[sequentially] in {keras} `keras_model_sequential` * Layers consist of .KULbginline[nodes] and .KULbginline[connections] * The vanilla choice is a .KULbginline[fully connected layer] `layer_dense` ] .pull-right[ * In building a neural network we can .KULbginline[tune]: - the number of layers - the number of nodes per layer - the activation functions - the layer type (more on this coming soon) - the loss function - the optimization algorithm - the batch size - the number of epochs ] --- # Meet the neural networks family <div style='height:100%; overflow:scroll;'> <img src = "Images/neural_network_types.png" /> </div> --- class: inverse, center, middle name: autoencoder # Auto encoders <html><div style='float:left'></div><hr color='#FAFAFA' size=1px width=796px></html> --- # Auto encoders .KULbginline[Auto encoders] compress the input data into a limited number of features. .pull-left[ <img src="Images/neural_network_auto_encoder.png" width="300" height="400" style="display: block; margin: auto;" /> ] .pull-right[ * .KULbginline[Unsupervised] machine learning algorithm. * .KULbginline[Decorrelation] of the input data, comparable with PCA. The low dimensional compressed data is often used as an input in traditional statistical models. * Input and output are identical. * Few nodes in the center of the network. This is the compressed feature space. * A high performing auto encoder is capable of reconstructing the input data based on compressed feature space. ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ Auto encoders can be implemented in Keras using the same tools that you have already learned during this course. <br> The following steps guide you in .KULbginline[constructing] and .KULbginline[training] your personal auto encoder for the MNIST dataset. * Make a sketch of the neural network that you will implement. * Define a neural network with 5 layers: * Layer 1: input (784 nodes) * Layer 2: Hidden layer (128 nodes) * Layer 3: Hidden layer (32 nodes), this is the compressed feature space * Layer 4: Hidden layer (128 nodes) * Layer 5: Output layer (784 nodes) * Choose an appropriate activation function for each layer: * identity * ReLU * sigmoid * softmax ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ * Which of these loss functions can we use to train the model? * mse * binary_crossentropy * categorical_crossentropy * Fit the model on the MNIST data in 10 epochs. * Experiment with adding other layer types to the model: * layer_gaussian_noise(stddev) * layer_dropout(rate) * layer_batch_normalization() ] --- class: clear .pull-left[ ```r *encoder <- keras_model_sequential() %>% layer_dense(units = 128, activation = 'sigmoid', input_shape = c(784)) %>% layer_dense(units = 32, activation = 'sigmoid') *model <- encoder %>% layer_batch_normalization() %>% layer_dense(units = 128, activation = 'sigmoid') %>% layer_dense(units = 784, activation = 'sigmoid') %>% compile(loss = 'binary_crossentropy', optimize = optimizer_rmsprop(), metrics = c('mse')) model %>% fit(input, input, epochs = 10, batch_size = 256, shuffle=TRUE, validation_split = 0.2) ``` ] .pull-right[ `encoder` contains the first part of the model for compressing the model. `model` is the full auto encoder, including the encode and decode step. By defining `model` as an extension of `encoder`, we can compress the data using `predict(encoder, ...)` after training the model. ] --- class: clear .pull-left[ ```r encoder <- keras_model_sequential() %>% layer_dense(units = 128, activation = `'sigmoid'`, input_shape = c(784)) %>% layer_dense(units = 32, activation = `'sigmoid'`) model <- encoder %>% layer_batch_normalization() %>% layer_dense(units = 128, activation = `'sigmoid'`) %>% layer_dense(units = 784, activation = `'sigmoid'`) %>% compile(loss = `'binary_crossentropy'`, optimize = optimizer_rmsprop(), metrics = c('mse')) model %>% fit(input, input, epochs = 10, batch_size = 256, shuffle=TRUE, validation_split = 0.2) ``` ] .pull-right[ I interpret the hidden nodes as binary features and therefore use a `sigmoid` activation function. We no longer use the `softmax` activation function in the last layer, since multiple output nodes can be activated simultaneously. I choose `binary_crossentropy` as a loss function, since we have independent bernoulli outcome variables. Another good combination would have been: * activation `ReLU` in the hidden layers * activation `identity` in the output layer * `mse` as the loss function ] --- class:clear .pull-left[ ```r encoder <- keras_model_sequential() %>% layer_dense(units = 128, activation = 'sigmoid', input_shape = c(784)) %>% layer_dense(units = 32, activation = 'sigmoid') model <- encoder %>% layer_batch_normalization() %>% layer_dense(units = 128, activation = 'sigmoid') %>% layer_dense(units = 784, activation = 'sigmoid') %>% compile(loss = 'binary_crossentropy', optimize = optimizer_rmsprop(), metrics = c('mse')) model %>% * fit(input, * input, epochs = 10, batch_size = 256, * shuffle=TRUE, validation_split = 0.2) ``` ] .pull-right[ The `input` variable is also passed to the model as the `output` parameter. The option `shuffle=TRUE` shuffles the training dataset after each epoch, such that the model is trained on different batches. ] --- # The big test Let's compare the input and output of our auto encoder. ```r result <- predict(model, input[1, , drop = FALSE]) plot_image(input[1, ]) # the original image plot_image(result[1, ]) # the reconstruction of the model ``` <img src="Images/neural_network_auto_encoder_comparison.png" width="750" height="370" style="display: block; margin: auto;" /> --- # What happens with random noise? ```r random <- matrix(runif(28^2), nrow = 1) grid.arrange( plot_image(random[1, ]) + theme(legend.position = 'none'), plot_image(predict(model, random)[1, ]) + theme(legend.position = 'none'), nrow = 1) ``` <img src="Images/neural_network_auto_encoder_noise.png" width="500" height="300" style="display: block; margin: auto;" /> --- class: inverse, center, middle name: cnn # Convolutional neural networks (CNN) <html><div style='float:left'></div><hr color='#FAFAFA' size=1px width=796px></html> --- # Convolutional neural networks (CNN) .pull-left[ So far, the first step in our analysis was to flatten the image matrix into a vector. ```r input <- array_reshape(input, c(nrow(input), 28*28)) / 255 ``` This approach * is not translation invariant. A completely different set of nodes gets activated when the image is shifted. * ignores the dependency between nearby pixels. * requires a large number of parameters/weights as each node in the first hidden layer is connected to all nodes in the input layer. .KULbginline[Convolutional layers] allow to handle dimensional data, .hi-pink[without] flattening. ] .pull-right[ <img src="Images/neural_network_flattening.png" width="300" height="350" style="display: block; margin: auto;" /> .right[Source: [Sumit Saha](https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53)] ] --- # Convolutional layers Classical hidden layers use .hi-pink[1 dimensional inputs] to construct .hi-pink[1 dimensional features]. 2d convolutional layers use .hi-pink[2 dimensional input] (images) to construct .hi-pink[2 dimensional feature maps]. .pull-left[ The weights in a 2d convolutional layer are structured in a small image, called the kernel or the filter. <img src="Images/neural_network_convolution_op1.png" width="300" height="200" style="display: block; margin: auto;" /> ] .pull-right[ We slide the kernel over the input image and compute matrix multiplications between the selected part of the image and the kernel. <img src="Images/neural_network_convolution_op2.png" width="400" height="250" style="display: block; margin: auto;" /> .right[Source: [Bradley Boehmke](https://github.com/rstudio-conf-2020/dl-keras-tf)] ] --- # Convolutional layers (cont.) Classical hidden layers use .hi-pink[1 dimensional inputs] to construct .hi-pink[1 dimensional features]. 2d convolutional layers use .hi-pink[2 dimensional input] (images) to construct .hi-pink[2 dimensional feature maps]. .pull-left[ The weights in a 2d convolutional layer are structured in a small image, called the kernel or the filter. <img src="Images/neural_network_convolution_op1.png" width="300" height="200" style="display: block; margin: auto;" /> ] .pull-right[ We slide the kernel over the input image and compute matrix multiplications between the selected part of the image and the kernel. <img src="Images/neural_network_convolution_op3.gif" width="400" height="250" style="display: block; margin: auto;" /> .right[Source: [Bradley Boehmke](https://github.com/rstudio-conf-2020/dl-keras-tf)] ] --- # Convolutional layers (cont.) 2d convolutional layers can .hi-pink[detect] the same, .hi-pink[local feature anywhere] in the image. .pull-left[ A useful feature for classifying the number four is the presence of straight, vertical lines. .hi-pink[Q]: How should the kernel look to detect this feature? ] .pull-right[ <img src="Images/neural_network_mnist4.png" width="200" height="200" style="display: block; margin: auto;" /> ] --- # Convolutional layers (cont.) 2d convolutional layers can .hi-pink[detect] the same, .hi-pink[local featur anywhere] in the image. .pull-left[ A useful feature for classifying the number four is the presence of straight, .hi-pink[vertical lines]. .hi-pink[Q]: How should the .hi-pink[kernel] look to detect this feature? <img src="Images/neural_networks_unnamed-chunk-83-1.png" width="200" height="200" style="display: block; margin: auto;" /> ] .pull-right[ original image: <img src="Images/neural_network_mnist4.png" width="200" height="200" style="display: block; margin: auto;" /> feature map: <img src="Images/neural_network_feature_map.png" width="200" height="200" style="display: block; margin: auto;" /> ] --- # Convolutional layers in {keras} .pull-left[ ```r keras_model_sequential() %>% layer_conv_2d(filters = 8, kernel_size = c(3, 3), strides = c(1, 1), input_shape = c(28, 28, 1)) ``` ] .pull-right[ * `filters = 8`: We construct .hi-pink[8 feature maps] associated to different kernels/.hi-pink[filters]. * `kernel_size = c(3, 3)`: The filter/.hi-pink[kernel] has a size of .hi-pink[3x3]. * `strides = c(1, 1)`: We .hi-pink[move] the .hi-pink[kernel] in steps of .hi-pink[1] pixel in both the horizontal and vertical direction. This is the most common choice. * `input_shape = c(28, 28, 1)`: If this is the first layer of the model, we also have to specify the dimensions of the input data. The input consists of .hi-pink[1 image] of size .hi-pink[28x28]. ] --- # Pooling A convolution layer is typically followed by a .hi-pink[pooling step], which reduces the size of feature maps. .pull-left[ .hi-pink[Pooling layers] divide the image in blocks of equal size and then aggregate the data per block. Two common operations are: * Average pooling ```r layer_average_pooling_2d(pool_size = c(2, 2), strides = c(2, 2)) ``` * Max pooling ```r layer_max_pooling_2d(pool_size = c(2, 2), strides = c(2, 2)) ``` ] .pull-right[ <img src="Images/neural_network_pooling.jpg" width="380" height="240" style="display: block; margin: auto;" /> * `pool_size = c(2, 2)`: Pool blocks of 2x2 * `strides = c(2, 2)`: Move in steps of size 2 in both the horizontal and vertical direction. ] --- # Flatten .pull-left[ ```r keras_model_sequential() %>% layer_conv_2d() %>% layer_max_pooling_2d() %>% * layer_flatten() %>% layer_dense() ``` ] .pull-right[ Finally, when all local features are extracted the data is flattened. A classical neural network (as built in the first part of these course) analyzes these local features. ] ```r input_conv <- mnist$train$x / 255 input_conv <- k_expand_dims(input_conv, axis = 4) test_input_conv <- mnist$test$x / 255 test_input_conv <- k_expand_dims(test_input_conv, axis = 4) ``` ```r model <- load_model_tf("model_c") ``` --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ .KULbginline[Fit] and .KULbginline[evaluate] a .KULbginline[convolutional neural network] on the MNIST dataset. Before you start, you should restructure the input and test dataset in the required format. ```r # We start from the original data input_conv <- mnist$train$x / 255 # We add a fourth dimension, such that one data point has size (28x28x1) input_conv <- k_expand_dims(input_conv, axis = 4) ``` Once you are satisfied with the performance of your model, you can plot some images that were misclassified by your model. ] --- class:clear .pull-left[ ```r model <- keras_model_sequential() %>% layer_conv_2d(filters = 8, kernel_size = 3, input_shape = c(28, 28, 1)) %>% layer_max_pooling_2d(pool_size = 2) %>% layer_flatten() %>% layer_dense(units = 10, activation = 'softmax') %>% compile(loss = 'categorical_crossentropy', optimize = optimizer_rmsprop(), metrics = c('accuracy')) model %>% fit(input_conv, output, epochs = 10, batch_size = 128, validation_split = 0.2) model %>% evaluate(test_input_conv, test_output,verbose = 0) ``` ] .pull-right[ ``` ## $loss ## [1] 0.1224037 ## ## $accuracy ## [1] 0.9659 ``` Model accuracy has increased significantly and improves further with more epochs. ] --- # Misclassifications ```r prediction <- model %>% predict(test_input_conv) category <- apply(prediction, 1, which.max)-1 actual_category <- apply(test_output, 1, which.max)-1 head(which(actual_category != category)) ``` ``` ## [1] 9 19 93 248 260 319 ``` ```r plot_image(test_input[93, ]) ``` <img src="Images/neural_network_conv_wrong.png" width="700" height="300" style="display: block; margin: auto;" /> --- # What happens to random data? .pull-left[ ```r random <- runif(28*28) random_conv <- matrix(random, nrow = 28, ncol = 28) random_conv <- k_expand_dims(random_conv, axis = 1) random_conv <- k_expand_dims(random_conv, axis = 4) plot_image(random) ``` <img src="Images/neural_networks_unnamed-chunk-101-1.png" width="300" height="300" style="display: block; margin: auto;" /> ] .pull-right[ There is not a single doubt, this has to be a nine! ```r predict(model, random_conv) ``` ``` ## [,1] [,2] [,3] [,4] [,5] ## [1,] 7.361005e-15 2.655474e-30 2.406724e-12 2.888013e-07 5.990156e-18 ## [,6] [,7] [,8] [,9] [,10] ## [1,] 3.955061e-15 6.562733e-10 2.638563e-21 0.9999998 2.079794e-08 ``` Actually almost all random images will be classified as a nine. ] --- # Inspecting the filter/kernel The third filter/kernel is the one we infered for detecting vertical lines. Can you find an interpretation for the other kernels? ```r require(tidyverse) require(gridExtra) weights <- map(1:8, function(x) {plot_image(as.numeric(model$weights[[1]][,,,x]), FALSE)}) weights[['nrow']] <- 2 do.call(grid.arrange, weights) ``` <img src="Images/neural_network_conv_kernel.png" width="600" height="300" style="display: block; margin: auto;" /> --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ We have now built .KULbginline[convolutional neural] networks using .hi-pink[layer_conv_2d()]. <br>In addition {keras} defines .hi-pink[layer_conv_1d()] and .hi-pink[layer_conv_3d()]. <br> <br> .hi-pink[Q]: For which data would you use .hi-pink[layer_conv_1d()]? <br> .hi-pink[Q]: Which function would you use to build a convolutional network for: * Colored images <br> * Movie data ] --- class: inverse, center, middle name: regression # Regression <html><div style='float:left'></div><hr color='#FAFAFA' size=1px width=796px></html> --- # Regression with neural networks <br> We predict the .KULbginline[claim frequency] and .KULbginline[severity] in the MTPL dataset with a .KULbginline[neural network]. .pull-left[ Load the dataset: ```r mtpl_orig <- read.table('./data/P&Cdata.txt', header = TRUE) mtpl_orig <- as_tibble(mtpl_orig) mtpl <- mtpl_orig %>% rename_all(function(.name) {.name %>% tolower }) %>% rename(expo = exp) ``` ] .pull-right[ Create a training and test set: ```r require(rsample) data_split <- initial_split(mtpl) mtpl_train <- training(data_split) mtpl_test <- testing(data_split) mtpl_train <- mtpl_train[sample(nrow(mtpl_train)), ] ``` ] --- # Regression with neural networks (cont.) <br> On Day 1 we fitted GLM regression models $$ \color{#FFA500}{Y} \sim \texttt{Poisson}(\lambda = \exp( \color{#e64173}{x}^{'}\color{#20B2AA}{\beta})).$$ <br> We now reconstruct this model as a neural network: .center[ Formula | GLM | Neural network ------------- | ------------- `\(\color{#FFA500}{Y}\)` | response | output node Poisson | distribution | loss function exp | inverse link function | activation function `\(\color{#e64173}{x}\)` | predictors | input nodes `\(\color{#20B2AA}{\beta}\)` | fitted effect | weights ] --- # Your first NN regression model Let's start with a model with only an intercept: $$ \color{#FFA500}{Y} \sim \texttt{Poisson}(\lambda = \exp( \color{#e64173}{1} \cdot \color{#20B2AA}{\beta})).$$ .pull-left[ ```r claim_count_model <- keras_model_sequential() %>% `layer_dense`(`units = 1`, activation = 'exponential', `input_shape = c(1)`, use_bias = FALSE) %>% compile(loss = 'poisson', optimize = optimizer_rmsprop(), metrics = c('mse')) ``` ] .pull-right[ * `layer_dense`: There are no hidden layers, the input layer is directly connected to the output layer. * `units = 1`: There is a single output node. * `input_shape = c(1)`: There is one input covariate, i.e. the intercept which will be constant one. ] --- # Your first NN regression model (cont.) Let's start with a model with only an intercept: $$ \color{#FFA500}{Y} \sim \texttt{Poisson}(\lambda = \exp( \color{#e64173}{1} \cdot \color{#20B2AA}{\beta})).$$ .pull-left[ ```r claim_count_model <- keras_model_sequential() %>% layer_dense(units = 1, `activation = 'exponential'`, input_shape = c(1), `use_bias = FALSE`) %>% compile(`loss = 'poisson'`, optimize = optimizer_rmsprop(), metrics = c('mse')) ``` ] .pull-right[ * `use_bias = FALSE`: We don't need a bias, since we explicitely include a constant covariate. * `activation = 'exponential'`: We use an exponential inverse link function. * `loss = 'poisson'`: We minimize the poisson likelihood ] --- # Your first NN regression model (cont.) .pull-left[ Prepare the input and output of the neural network.<br>Both should be of type .hi-pink[matrix] or tensor. ```r # (n x 1) matrix of constant one. input <- matrix(1, nrow = nrow(mtpl_train), ncol = 1) input_test <- matrix(1, nrow = nrow(mtpl_test), ncol = 1) # (n x 1) matrix with the claim counts output <- matrix(mtpl_train %>% pull(nclaims), nrow = nrow(mtpl_train), ncol = 1) output_test <- matrix(mtpl_test %>% pull(nclaims), nrow = nrow(mtpl_test), ncol = 1) ``` ] .pull-right[ Calibrate the neural network: ```r claim_count_model %>% fit(input, output, epochs = 20, batch_size = 1024, validation_split = 0) ``` * `validation_split = 0`: We don't use a validation set, because we will compare the results with the glm fit. <img src="Images/neural_network_count_convergence.png" width="300" height="150" style="display: block; margin: auto;" /> ] --- # Comparing our neural network with a GLM We compare the results of our neural network with the same model specified as a GLM. .pull-left[ ```r glm_fit <- glm(nclaims ~ 1, data = mtpl_train, family = poisson(link = log)) glm_fit ``` ``` ## ## Call: glm(formula = nclaims ~ 1, family = poisson(link = log), data = mtpl_train) ## ## Coefficients: ## (Intercept) ## -2.087 ## ## Degrees of Freedom: 122423 Total (i.e. Null); 122423 Residual ## Null Deviance: 67510 ## Residual Deviance: 67510 AIC: 95860 ``` ] .pull-right[ ```r claim_count_model$weights ``` ``` ## [[1]] ## <tf.Variable 'dense_2/kernel:0' shape=(1, 1) dtype=float32, numpy=array([[-2.0794141]], dtype=float32)> ``` <br> There is a small difference in the parameter estimate, resulting from a different optimization technique. .center[ | | (Intercept) | | ---- | ------------- | | GLM | -2.088 | | Neural network | -2.079| ] ] --- # Comparing our neural network with a GLM (cont.) .pull-left[ We implement the Poisson loss function: $$ P(\color{#FFA500}{Y} = k) = \frac{e^{-\lambda}\lambda^k}{k!} $$ $$ \log(P(\color{#FFA500}{Y} = k)) = -\lambda + k \cdot \log(\lambda) - \log(k!) $$ ```r poisson_loss <- function(pred, actual) { mean(pred - actual * log(pred)) } poisson_loss(predict(glm_fit, mtpl_test, type = 'response'), mtpl_test$nclaims) ``` ``` ## [1] 0.3824852 ``` ] .pull-right[ ```r evaluate(claim_count_model, input_test, output_test, verbose = FALSE) ``` ``` ## $loss ## [1] 0.3824905 ## ## $mse ## [1] 0.1366765 ``` .center[ | | In-sample loss | Out-of-sample loss | | ---- | ------------- | -------- | | GLM | 0.3829 | 0.3831 | | Neural network | 0.3829 | 0.3831 | ] ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ We have shown that a Poisson GLM can be implemented as a neural network. <br> 1. Can you adapt this code to replicate a binomial GLM with a logit link function.<br> <i class="fas fa-lightbulb", style="color:#116E8A"></i> The `sigmoid` activation function is the inverse of the logit link function. 2. Calibrate the model defined in 1 on the outcome variable (nclaims > 0), i.e. modelling no claim versus having at least one claim. Add accuracy as a metric in your model. 3. Compare your fitted neural network with a glm model. ] --- class:clear .pull-left[ ```r claim_model_binair <- keras_model_sequential() %>% layer_dense(units = 1, activation = `'sigmoid'`, input_shape = c(1), use_bias = FALSE) %>% compile(loss = `'binary_crossentropy'`, optimize = optimizer_rmsprop(), metrics = c('accuracy')) claim_model_binair %>% fit(input, `output > 0`, epochs = 40, batch_size = 1024, validation_split = 0) glm_binair <- glm((nclaims > 0) ~ 1, data = mtpl_train, family = binomial(link = logit)) ``` ] .pull-right[ The `sigmoid` activation function is the inverse of the logit link function. `binary_crossentropy` minimizes the loglikelihood of bernoulli distributed random variables: $$ -\frac{1}{n} \sum_{i=1}^n (\color{#FFA500}{y_i} \cdot \log(p_i) + (1-\color{#FFA500}{y_i}) \cdot \log(1-p_i)).$$ ] --- # Adding exposure Each observation in the MTPL dataset has an .KULbginline[exposure] associated to it. .pull-left[ The loss function, including exposure, is $$ \mathcal{L} = \sum_i \texttt{expo}_i \cdot \lambda_i - k_i \cdot \log(\texttt{expo}_i \cdot \lambda_i),$$ where: * `\(\texttt{expo}_i\)` is the exposure of observation `\(i\)`, * `\(\lambda_i\)` is the fitted intensity, * `\(k_i\)` is the observed claim count. ] .pull-right[ We rewrite this loss function as: `\begin{align} \mathcal{L} &= \sum_i \texttt{expo}_i \cdot \lambda_i - k_i \cdot \log(\texttt{expo}_i \cdot \lambda_i) \\ &= \texttt{expo}_i \cdot (\lambda_i - \frac{k_i}{\texttt{expo}_i} \log(\lambda_i)). \end{align}` This is the loss function for a Poisson regression model with: * observations `\(\frac{k_i}{\texttt{expo}_i}\)`, * weights `\(\texttt{expo}_i\)`. ] The parameter estimates of the following two GLMs are identical: ```r glm(nclaims ~ offset(log(expo)) + ageph, family = poisson, data = mtpl_train) glm(nclaims / expo ~ ageph, family = poisson, data = mtpl_train, weights = expo) ``` --- # Adding exposure (cont.) .pull-left[ ```r *exposure <- as.numeric(mtpl_train %>% pull(expo), * nrow = nrow(mtpl_train), * ncol = 1) claim_count_model <- keras_model_sequential() %>% layer_dense(units = 1, activation = 'exponential', input_shape = c(1), use_bias = FALSE) %>% compile(loss = 'poisson', optimize = optimizer_rmsprop(), metrics = c('mse')) claim_count_model %>% fit(input, * output / exposure, epochs = 20, batch_size = 1024, validation_split = 0, * sample_weight = exposure) ``` ] .pull-right[ We create a vector of exposures with the same length as our training dataset. The model does not change, only the callibration procedure. We divide the output by the exposure and include the exposure vector as weights. ] --- # Adding exposure (cont.) .pull-left[ ```r *exposure_test <- k_constant(mtpl_test %>% pull(expo), * shape = c(nrow(mtpl_test))) claim_count_model %>% evaluate(input_test, output_test, * sample_weight = exposure_test, verbose = FALSE) ``` ] .pull-right[ When evaluating the model, the weights have to be provided as a 1-dimensional tensor. ] --- # Adding predictors and hidden layers We have covered the fundamentals and are ready to build some more interesting neural networks. <br> We start by adding a single covariate `ageph`. .pull-left[ ```r input <- matrix(mtpl_train %>% pull(ageph), nrow = nrow(mtpl_train), ncol = 1) claim_count_model <- keras_model_sequential() %>% * layer_batch_normalization(input_shape = 1) %>% * layer_dense(units = 5, activation = 'relu') %>% layer_dense(units = 1, activation = 'exponential', * use_bias = TRUE) %>% compile(loss = 'poisson', optimize = optimizer_rmsprop(), metrics = c('mse')) ``` ] .pull-right[ `ageph` is added to the input matrix. <br> We remove the constant one from the input matrix and instead set `use_bias = TRUE`. The first layer in the network is `layer_batch_normalization`. <br> This layer centers and scales the input variables, conform the preprocessing steps discussed on Day 1. Centering and scaling helps the model to faster converge. Our model has a single hidden layer with 5 nodes. ] --- # Adding predictors and hidden layers (cont.) .pull-left[ We train the model on the training data. ```r claim_count_model %>% fit(input, output / exposure, epochs = 20, batch_size = 1024, * validation_split = 0.2, sample_weight = exposure) ``` ] .pull-right[ Let's visualize the fitted effect for ages between 18 and 100. ```r age <- 18:100 + 0.001 age_effect <- log(claim_count_model %>% predict(age)) ggplot() + theme_bw() + geom_point(aes(age, age_effect)) ``` <img src="Images/neural_network_age_effect.png" width="300" height="300" style="display: block; margin: auto;" /> ] --- # Adding predictors and hidden layers (cont.) .pull-left[ We compare the fitted effect of age with the predictions of a `gam` model. ```r require(mgcv) gam_age <- gam(nclaims/exposure ~ s(ageph), data = mtpl_train, family = poisson, weights = exposure) df <- data.frame(ageph = age) age_effect_gam <- log( predict(gam_age, newdata = data.frame(ageph = age), type = 'response')) ``` ] .pull-right[ The figure below includes a neural network with: * 1 hidden layer with 5 nodes * 1 hidden layer with 10 nodes * 2 hidden layers each with 5 nodes <img src="Images/neural_network_fitted_effect_age_gam.png" width="400" height="300" style="display: block; margin: auto;" /> ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ It is time to combine everything you learned during this course in a .KULbginline[case study]! <br> <br> In this case study you will predict the .KULbginline[average claim size] in the MTPL protfolio with a .KULbginline[neural network]. **Step 1**: .KULbginline[Data preparation]: * Filter the MTPL dataset on `nclaims > 0`. * Construct a random training and test split. **Step 2**: .KULbginline[Data exploration]: * Visualize the density of `avg` and `log(avg)`. * Which distribution would be suitable for modelling the claim size? Irrespective of your choice we advise to estimate a lognormal distribution.<br>If you feel very brave you could implement and optimize your own gamma loss function. ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ <br> **Step 3**: .KULbginline[More data preparation]: * Write a recipe for preparing the input data set. <br>Check the [reference page](https://tidymodels.github.io/recipes/reference/index.html) for inspiration. * Your recipe should contain the following steps:<br> ○ Remove the variables: id, nclaims, amount, exp, town and pc.<br> ○ Normalize the data.<br> ○ Take the logarithm of avg.<br> ○ ...<br> * Bake the training and test dataset following your recipe. ] --- name: yourturn class: clear .left-column[ ## <i class="fa fa-edit"></i> <br> Your turn ] .right-column[ <br> **Step 4.** .KULbginline[Model building]: * Construct and train at least five different neural networks on the training dataset. * You can tune:<br> ○ the number of layers,<br> ○ the number of nodes per layer,<br> ○ the batch size,<br> ○ the activation functions,<br> ○ adding dropout layers,<br> ○ including batch normalization after some layers,<br> ○ ...<br> **Step 5.** .KULbginline[Model evaluation]: * Evaluate your models on the test dataset. * Compare your selected neural network with a glm or gbm. Which model is preferred? ] --- class:clear .pull-left[ Reading in the data: ```r mtpl_orig <- read.table('./data/P&Cdata.txt', header = TRUE) mtpl_orig <- as_tibble(mtpl_orig) mtpl <- mtpl_orig %>% rename_all(function(.name) {.name %>% tolower }) ``` Select only records with atleast one claim: ```r mtpl <- mtpl %>% filter(nclaims > 0) ``` ] .pull-right[ Split the data into a training and test dataset. ```r require(rsample) ``` ``` ## Loading required package: rsample ``` ```r set.seed(1) data_split <- initial_split(mtpl) mtpl_train <- training(data_split) mtpl_test <- testing(data_split) mtpl_train <- mtpl_train[sample(nrow(mtpl_train)), ] ``` ] ```r colnames(mtpl) ``` ``` ## [1] "id" "nclaims" "amount" "avg" "exp" "coverage" ## [7] "fuel" "use" "fleet" "sex" "ageph" "bm" ## [13] "agec" "power" "pc" "town" "long" "lat" ``` --- class:clear .pull-left[ Visualizing the outcome variable: ```r ggplot(mtpl_train) + theme_bw() + geom_density(aes(log(avg))) ``` The loss function for a gamma distribution: ```r gamma_loss <- function(y_true, y_pred) { k_mean(y_true / y_pred + k_log(y_pred), axis = 2) } ``` ] .pull-right[ <!-- --> ] --- class:clear .pull-left[ ```r require(recipes) mtpl_recipe <- recipe(avg ~ ., data = mtpl_train) %>% step_rm(id, nclaims, amount, exp, town, pc) %>% step_nzv(all_predictors()) %>% step_normalize(all_numeric(), -all_outcomes()) %>% step_dummy(all_nominal(), one_hot = TRUE) %>% step_log(avg) %>% prep(mtpl_train) baked_mtpl_train <- bake(mtpl_recipe, new_data = mtpl_train) baked_mtpl_test <- bake(mtpl_recipe, new_data = mtpl_test) input_mtpl_train <-select(baked_mtpl_train, -avg) %>% as.matrix() input_mtpl_test <- select(baked_mtpl_test, -avg) %>% as.matrix() output_mtpl_train <- baked_mtpl_train %>% pull(avg) output_mtpl_test <- baked_mtpl_test %>% pull(avg) ``` ] .pull-right[ * `step_rm`: Remove specific variables from the dataset. * `step_nzv`: Remove variables with near zero variance form the dataset. * `step_normalize`: Normalize all numeric predictor variables. * `step_dummy`: Construct dummy variables with one-hot encoding. * `step_log`: Take the logarithm of `avg`. ] --- class:clear .pull-left[ An first neural network, many other configuration are possible: ```r model <- keras_model_sequential() %>% * layer_dense(units = 16, activation = 'relu', input_shape = ncol(input_mtpl_train)) %>% layer_dense(units = 1, * activation = NULL) %>% * compile(loss = 'mse', optimize = optimizer_rmsprop(), metrics = c('mse')) model %>% fit( input_mtpl_train, output_mtpl_train, epochs = 30, batch_size = 256, validation_split = 0.2 ) ``` ] .pull-right[ `units = 16`: I start with a low number of nodes, since we do not have many input variables. ```r dim(input_mtpl_train) ``` ``` ## [1] 13722 13 ``` `activation = NULL`: No activation in the output layer, this is similar to linear regression. `loss = 'mse'`: We estimate a gaussian distribution for `log(avg)`. ] --- class:clear Compare the loss of the neural network and the glm. ```r evaluate(model, input_mtpl_test, output_mtpl_test, verbose = FALSE) fit_glm <- lm(avg ~ ., data = baked_mtpl_train) mean((predict(fit_glm, newdata = baked_mtpl_test) - baked_mtpl_test$avg)^2) ``` --- # Thanks! <img src="Images/xaringan.png" class="title-hex"> <br> <br> <br> <br> Slides created with the R package [xaringan](https://github.com/yihui/xaringan). <br> <br> <br> Course material available via <br> <svg style="height:0.8em;top:.04em;position:relative;fill:#116E8A;" viewBox="0 0 496 512"><path d="M165.9 397.4c0 2-2.3 3.6-5.2 3.6-3.3.3-5.6-1.3-5.6-3.6 0-2 2.3-3.6 5.2-3.6 3-.3 5.6 1.3 5.6 3.6zm-31.1-4.5c-.7 2 1.3 4.3 4.3 4.9 2.6 1 5.6 0 6.2-2s-1.3-4.3-4.3-5.2c-2.6-.7-5.5.3-6.2 2.3zm44.2-1.7c-2.9.7-4.9 2.6-4.6 4.9.3 2 2.9 3.3 5.9 2.6 2.9-.7 4.9-2.6 4.6-4.6-.3-1.9-3-3.2-5.9-2.9zM244.8 8C106.1 8 0 113.3 0 252c0 110.9 69.8 205.8 169.5 239.2 12.8 2.3 17.3-5.6 17.3-12.1 0-6.2-.3-40.4-.3-61.4 0 0-70 15-84.7-29.8 0 0-11.4-29.1-27.8-36.6 0 0-22.9-15.7 1.6-15.4 0 0 24.9 2 38.6 25.8 21.9 38.6 58.6 27.5 72.9 20.9 2.3-16 8.8-27.1 16-33.7-55.9-6.2-112.3-14.3-112.3-110.5 0-27.5 7.6-41.3 23.6-58.9-2.6-6.5-11.1-33.3 2.6-67.9 20.9-6.5 69 27 69 27 20-5.6 41.5-8.5 62.8-8.5s42.8 2.9 62.8 8.5c0 0 48.1-33.6 69-27 13.7 34.7 5.2 61.4 2.6 67.9 16 17.7 25.8 31.5 25.8 58.9 0 96.5-58.9 104.2-114.8 110.5 9.2 7.9 17 22.9 17 46.4 0 33.7-.3 75.4-.3 83.6 0 6.5 4.6 14.4 17.3 12.1C428.2 457.8 496 362.9 496 252 496 113.3 383.5 8 244.8 8zM97.2 352.9c-1.3 1-1 3.3.7 5.2 1.6 1.6 3.9 2.3 5.2 1 1.3-1 1-3.3-.7-5.2-1.6-1.6-3.9-2.3-5.2-1zm-10.8-8.1c-.7 1.3.3 2.9 2.3 3.9 1.6 1 3.6.7 4.3-.7.7-1.3-.3-2.9-2.3-3.9-2-.6-3.6-.3-4.3.7zm32.4 35.6c-1.6 1.3-1 4.3 1.3 6.2 2.3 2.3 5.2 2.6 6.5 1 1.3-1.3.7-4.3-1.3-6.2-2.2-2.3-5.2-2.6-6.5-1zm-11.4-14.7c-1.6 1-1.6 3.6 0 5.9 1.6 2.3 4.3 3.3 5.6 2.3 1.6-1.3 1.6-3.9 0-6.2-1.4-2.3-4-3.3-5.6-2z"/></svg> https://github.com/katrienantonio/workshop-ML